This is the story of a security incident with a happy ending, or at least a benign one. It all started with an alert from a server indicating an anomaly in “Network Out” traffic from a system under our control. The detection simply showed a system uploading significant amounts of data.

As part of our security monitoring, we analyze inbound and outbound network traffic for sites and systems. Although the primary function of this analysis is to monitor usage for a specific customer, we are also interested in bigger patterns that serve our threat-hunting capabilities. While any business can monitor its own bandwidth, we add significant value by performing statistical analysis on groups of customers. Specifically, we compare each system against its cohort in terms of standard deviations from the cohort's norm. In this case, it was exactly this kind of analysis that made us focus on a specific server, inside a customer account, that was behaving two standard deviations outside the norm for its cohort.
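To make the cohort comparison concrete, here is a minimal Python sketch that flags systems whose Network Out sits more than two standard deviations from their cohort's mean. It assumes each system's traffic has already been reduced to a single number (for example, average Mbps over the same window). The function name, system names, and sample values are hypothetical, and a plain z-score is only one way to do this, not necessarily what our tooling runs.

```python
from statistics import mean, stdev

def cohort_outliers(network_out: dict[str, float], threshold: float = 2.0) -> dict[str, float]:
    """Return systems whose Network Out lies more than `threshold` standard
    deviations from the cohort mean, mapped to their z-score."""
    if len(network_out) < 2:
        return {}  # sample standard deviation needs at least two systems
    values = list(network_out.values())
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation across the cohort
    if sigma == 0:
        return {}  # every system identical: nothing stands out
    outliers = {}
    for system, value in network_out.items():
        z = (value - mu) / sigma
        if abs(z) > threshold:
            outliers[system] = z
    return outliers

# Made-up cohort: average Mbps out per system over the same window.
EXAMPLE_COHORT = {
    "server-a": 9.0, "server-b": 10.0, "server-c": 11.0, "server-d": 10.0,
    "server-e": 9.0, "server-f": 11.0, "server-g": 10.0, "server-h": 10.0,
    "server-i": 9.0, "server-j": 50.0,
}

if __name__ == "__main__":
    for system, z in cohort_outliers(EXAMPLE_COHORT).items():
        print(f"{system}: z = {z:.2f}")
```

Note that the outlier is included when computing the mean and standard deviation, which inflates both; with small cohorts, a leave-one-out comparison or a robust statistic such as the median absolute deviation may separate outliers more reliably.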

Why did we care?

TechBento’s primary directive is to be fiduciaries for our clients. To do this, we believe in providing value that is greater than the sum of all parts. In this example, the value comes from leveraging data in cybersecurity decisions. Unexpected or unnecessary Data Out affects our customers in three ways: it may represent malicious activities such as data leaks or cryptojacking; it degrades performance as a single site’s bandwidth is consumed; and it may affect the bottom line where the site pays for excess data in/out.

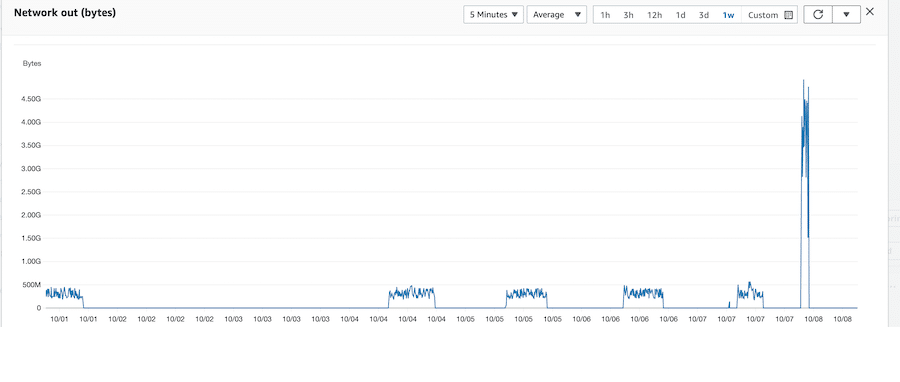

The alarm was triggered and our security team began looking at patterns, part of which appears in the graph above. Note the scale on the left, which indicates as much as 500Mbps of outbound transfer for hours at a time. The site’s total data storage was less than 600Gb, so by extrapolation the site could potentially have been pumping out that much data every day. In other words, our validation process made it clear that something was happening.

Image shows a network out graph with peaks of 50Mbps occurring routinely.
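The extrapolation above is simple unit arithmetic. The sketch below assumes decimal units (1 GB = 10⁹ bytes) and reads the storage figure as gigabytes; both are assumptions, and if the figure really is gigabits, the transfer time shrinks eightfold. The helper names are hypothetical.

```python
BITS_PER_BYTE = 8

def gigabytes_transferred(rate_mbps: float, hours: float) -> float:
    """Data moved by a sustained rate, in decimal gigabytes (10**9 bytes)."""
    bits = rate_mbps * 1_000_000 * hours * 3600
    return bits / BITS_PER_BYTE / 1_000_000_000

def hours_to_transfer(gigabytes: float, rate_mbps: float) -> float:
    """Hours needed to move `gigabytes` at a sustained `rate_mbps`."""
    bits = gigabytes * 1_000_000_000 * BITS_PER_BYTE
    return bits / (rate_mbps * 1_000_000) / 3600

if __name__ == "__main__":
    print(f"{gigabytes_transferred(500, 1):.0f} GB per hour at 500Mbps")
    print(f"{hours_to_transfer(600, 500):.1f} hours to move 600 GB at 500Mbps")
```

At a sustained 500Mbps, about 225 GB leaves per hour, so a 600 GB volume could be copied out in under three hours.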

What could it be?

Our internal process requires us to set a null hypothesis for every security incident we validate. We immediately struggled to do so in this case because, sans other information, this looked malicious, yet there was no secondary evidence to support that. As one of our more closely guarded sites, this one had outbound connection filtering, abuse monitoring, anti-malware with behavior monitoring, and user monitoring in place. For the traffic to be malicious, it would most likely have gone to a malicious IP and been flagged by our other security tools. With no other cause for alarm, we begrudgingly settled on testing the following hypotheses:

- The malicious traffic is not part of a cryptomining operation, and the site has not been cryptojacked.

- The site is not affected by malicious applications such as those used for leaking information or as part of a ransomware campaign.

- The site is not connecting to a trusted location.

More importantly, usage patterns suggested this was happening during “working” hours, so we postulated that the event was tied to a user session and occurred only when a user (specific or not) was logged on.
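One way to test the session hypothesis is to split traffic samples by whether they fall inside a logon session and compare the averages. This is only a sketch: the sample format (hour of day, Mbps), the half-open session windows, and the numbers are made up for illustration; real inputs would come from the system's logon events and the traffic monitor.

```python
from statistics import mean

# Hypothetical inputs: (hour_of_day, mbps) samples and half-open (start, end) logon windows.
Sample = tuple[float, float]
Session = tuple[float, float]

def in_any_session(hour: float, sessions: list[Session]) -> bool:
    """True if `hour` falls inside any [start, end) logon window."""
    return any(start <= hour < end for start, end in sessions)

def session_traffic_means(samples: list[Sample], sessions: list[Session]) -> tuple[float, float]:
    """Mean Network Out (Mbps) inside vs. outside logon sessions."""
    inside = [mbps for hour, mbps in samples if in_any_session(hour, sessions)]
    outside = [mbps for hour, mbps in samples if not in_any_session(hour, sessions)]
    return (mean(inside) if inside else 0.0, mean(outside) if outside else 0.0)

# Made-up day: one user logged on from 08:00 to 17:00.
SESSIONS: list[Session] = [(8, 17)]
SAMPLES: list[Sample] = [(h, 50.0 if 8 <= h < 17 else 10.0) for h in range(24)]

if __name__ == "__main__":
    during, outside = session_traffic_means(SAMPLES, SESSIONS)
    print(f"during sessions: {during} Mbps, outside: {outside} Mbps")
```

A large, consistent gap between the two means supports tying the traffic to user sessions; a fuller analysis would also check whether the gap follows one specific user.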

Digging In…

The malicious traffic is not part of a crypto-mining operation, and the site has not been crypto-jacked.

During a thorough vulnerability assessment, we discovered a potentially severe CVE in one piece of software that could have allowed a hostile takeover. We also knew the system had been public facing during the Microsoft Print Spooler CVE disclosures (and the subsequent update mess). Both events left open the possibility of an exploit, but so far there was no indication of one. To help find evidence, we deployed an exploit analysis tool and waited. As soon as user sessions started and stopped, new alarms triggered, and they looked sufficiently malicious:

```
"C:\Windows\Microsoft.NET\Framework64\v4.0.30319\csc.exe" /noconfig /fullpaths @"C:\Users\auser\AppData\Local\Temp\12\hop1bgs5.cmdline"
```

The events suggested that a temporary file was launching a command line, so to prove this we needed to review that command line. Things started to look promising when we found a lack of supporting files, suggesting evasive tactics. Evasive tactics, execution from the user profile, and remote code execution are telltale signs of crypto-jacking, so this looked credible. We began process sampling to capture the command in detail and were quickly disappointed to see:

```
C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe -NoProfile -NonInteractive -NoLogo -WindowStyle hidden -ExecutionPolicy U...a\Amazon\EC2-Windows\Launch\Module\Ec2Launch.psd1"; Set-Wallpaper"
```

All it turned out to be was the wallpaper being set on the user’s desktop. We continued to dig for processes starting at logon but were ultimately unable to disprove the hypothesis, and concluded that “the site has not been crypto-jacked.”

The site is not affected by malicious applications such as those used for leaking information or as part of a ransomware campaign.

The next step was to focus on events after logon. We deployed an application behavior monitoring tool to further isolate and identify an event. Within moments we learned two new things: there was a suspicious and unexpected call to PowerShell, and, because our behavior monitoring cancelled that call, we had also broken a mission-critical application.

```
C:\Windows\SysWOW64\WindowsPowerShell\v1.0\powershell.exe C:\Windows\SysWOW64\WindowsPowerShell\v1.0\powershell.exe Remove-OdbcDsn -Name 192.168.1.10TrialWorksSQL -DsnType User
```

Unfortunately for the investigation, the two events were directly tied to each other. As we discovered, the mission-critical application, TrialWorks Case Management Software, makes a clandestine call to PowerShell to modify its SQL database connection settings (the ODBC DSN in the command above). This was a blow to the investigation and to our future plans to restrict users from running PowerShell, but a good thing for our customer. It was another false positive, and evidence that the site “is not affected by malicious applications such as those used for leaking information or as part of a ransomware campaign.”

The site is not connecting to a trusted location.

Slightly exasperated, we stuck to the method and dug even deeper. We analyzed every single network call the system made (there were thousands per hour), cross-checking every public IP against our outbound filters and with manual lookups. Unable to find a single connection to a suspicious IP, we began looking at trusted IPs, which until this point had been excluded. This is where we caught a break: we saw heavy traffic to Microsoft from a single process for a single user, an anomaly all by itself. The traffic was far greater than that of any similar process, and large enough to account for the bulk of the traffic we had set out to analyze in the first place. While this disproved the hypothesis, showing that “the site is connecting to a trusted location,” the process involved was somewhat peculiar: OUTLOOK.exe.
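The triage order described here (ignore private addresses, check public destinations against a blocklist, then rank even trusted destinations by volume per process and user) can be sketched with Python's standard `ipaddress` module. The flow-record shape, the process and user names, and the addresses are hypothetical; in practice the records would come from whatever network monitoring tool is collecting them.

```python
import ipaddress
from collections import defaultdict
from collections.abc import Iterable, Iterator

# Hypothetical flow record: (process, user, remote_ip, bytes_sent).
Flow = tuple[str, str, str, int]

def public_flows(flows: Iterable[Flow]) -> Iterator[Flow]:
    """Yield only flows whose remote address is globally routable."""
    for flow in flows:
        if ipaddress.ip_address(flow[2]).is_global:
            yield flow

def blocklist_hits(flows: Iterable[Flow], blocklist: set[str]) -> list[Flow]:
    """Public flows whose destination appears on the outbound blocklist."""
    return [flow for flow in public_flows(flows) if flow[2] in blocklist]

def top_talkers(flows: Iterable[Flow]) -> list[tuple[tuple[str, str], int]]:
    """Total bytes sent to public destinations per (process, user), largest first."""
    totals: dict[tuple[str, str], int] = defaultdict(int)
    for process, user, _remote_ip, sent in public_flows(flows):
        totals[(process, user)] += sent
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)

# Made-up sample data for illustration only.
FLOWS: list[Flow] = [
    ("OUTLOOK.exe", "user-a", "52.96.10.20", 38_000_000),
    ("OUTLOOK.exe", "user-a", "52.96.10.20", 38_000_000),
    ("OUTLOOK.exe", "user-b", "52.96.10.21", 120_000),
    ("browser.exe", "user-b", "8.8.8.8", 450_000),
    ("lob-app.exe", "user-a", "192.168.1.10", 900_000),  # private address, ignored
]

if __name__ == "__main__":
    print(blocklist_hits(FLOWS, blocklist=set()))  # empty blocklist, so no hits
    for (process, user), sent in top_talkers(FLOWS):
        print(f"{process:<12} {user:<8} {sent:>12,} bytes")
```

Because trusted destinations are not filtered out before ranking, a single process and user pair sending far more than its peers stands out even when every destination is legitimate.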

The Prestige…

Whomp. If OUTLOOK.exe had been compromised, all users on this system would have been affected. Additionally, malicious actions are often like water: they take the easy way out. It would be extraordinarily complicated to devise a scheme in which OUTLOOK.exe functioned normally for a single user while somehow carrying out nefarious activity. As with most incidents, the explanation turned out to be quite basic… So we looked at the user’s Microsoft Outlook. Within moments we found a single email, with a 38Mb attachment, sitting in the Outbox. No errors. No issues. No warnings. Just hanging out for the past few weeks, refusing to be sent. Delete, and instant fix.

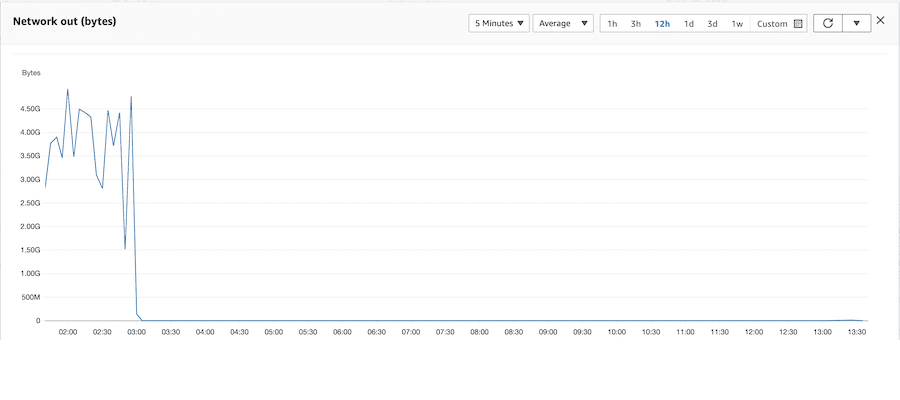

Once we deleted the problem email, traffic returned to normal.

This site’s normal load is 10Mbps of upload; it was averaging 50Mbps over the entire period. The mystery email was 38Mb and was seemingly being transferred to Microsoft every second during this one user’s working hours. Removing it showed immediate improvement.
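A quick back-of-the-envelope check relates these figures: dividing the size of the stuck item by the excess over baseline gives the implied interval between full upload attempts. This sketch takes the numbers above as inputs and assumes the entire excess is spent re-sending that one item; the function name is hypothetical.

```python
def seconds_per_attempt(item_megabits: float, observed_mbps: float, baseline_mbps: float) -> float:
    """Implied seconds between full uploads of one item, assuming the entire
    excess over baseline is spent re-sending that item."""
    excess_mbps = observed_mbps - baseline_mbps
    if excess_mbps <= 0:
        raise ValueError("observed rate must exceed the baseline")
    return item_megabits / excess_mbps

if __name__ == "__main__":
    # 38 read as megabits, versus 38 megabytes converted to megabits (x8).
    print(f"{seconds_per_attempt(38, 50, 10):.2f} s")      # 0.95 s
    print(f"{seconds_per_attempt(38 * 8, 50, 10):.2f} s")  # 7.60 s
```

Read as megabits, 38Mb per attempt lines up with roughly one upload per second; if the attachment size is in megabytes, the same 40Mbps excess corresponds to one attempt about every 7.6 seconds. Either way, a single stuck item is enough to account for the sustained load.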

The graph above shows a spike because we also rebuilt the Outlook profile and let it re-sync the user’s data, but the point stands: once the corrupt mail item was cleaned out, outbound network traffic returned to normal.

The Lesson:

A single item in the Outbox in Microsoft Outlook can compromise the availability of a site by consuming all available outbound bandwidth.